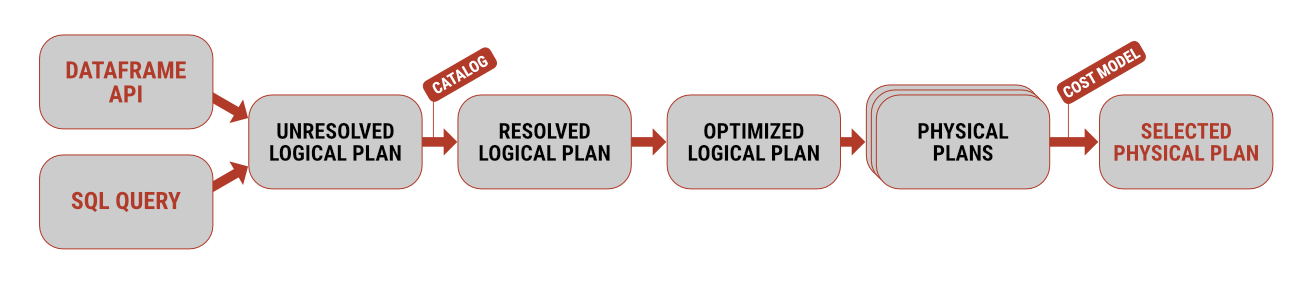

Apache Spark / DataFrame API vs. SQL

There are several ways to work with data in Apache Spark. If you come from a software development background, you will likely lean towards the DataFrame API.

In older versions, limiting a sorted result to a fixed number of rows required a nested query: the inner query sorted the table, and only then could the outer query filter by row count.
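To make the difference concrete, here is a minimal sketch of both patterns. It is only an illustration under stated assumptions: the text does not name the database, so an in-memory SQLite database (via Python's standard `sqlite3` module) stands in for it, the `employees` table and its columns are hypothetical, and `ROW_NUMBER()` is swapped in to number the sorted rows inside the nested query. Window functions need SQLite 3.25 or newer.

```python
import sqlite3

# In-memory SQLite database used purely as a stand-in; the table and
# column names are hypothetical and not taken from the article.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ann", 5200), ("Bob", 4100), ("Cid", 6300), ("Dee", 3900), ("Eve", 5800)],
)

# Older pattern: the inner query numbers the rows in sorted order,
# and only the outer query can filter on that number.
nested = conn.execute(
    """
    SELECT name, salary
    FROM (
        SELECT name, salary,
               ROW_NUMBER() OVER (ORDER BY salary DESC) AS rn
        FROM employees
    )
    WHERE rn <= 3
    ORDER BY rn
    """
).fetchall()

# Newer pattern: sorting and limiting happen in a single query block.
direct = conn.execute(
    "SELECT name, salary FROM employees ORDER BY salary DESC LIMIT 3"
).fetchall()

print(nested)
print(direct)
```

Both queries return the same three highest-paid rows; the second simply expresses the intent without the extra nesting level.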

Defining a PL/SQL function or procedure inside an SQL query might seem strange at first glance, but it has one significant advantage: the database does not need to switch context between the SQL and PL/SQL engines, which in certain cases leads to a substantial performance gain.